We’ve all been there. A fragment of a melody, an earworm from a movie, or a half-remembered tune from years ago gets stuck in your head, and it’s maddening. For years, Google’s “hum to search” feature has been the gold standard for solving these musical mysteries. Now, that powerful tool is making its way to a new home: Gemini.

In a move that further solidifies Gemini’s role as Google’s all-in-one AI assistant, the ability to identify a song by playing, singing, or humming it is rolling out to the Gemini app on Android.

But is this just a new coat of paint on a classic feature, or a genuine upgrade? Let’s take a closer look.

How to Get Gemini to Hear Your Melody

Unlike the dedicated microphone or music icon you might be used to in the Google Search widget, activating this feature in Gemini requires a more conversational approach.

- Open the Gemini app on your Android device.

- Type a prompt like “What song is this?” or “Identify this song.”

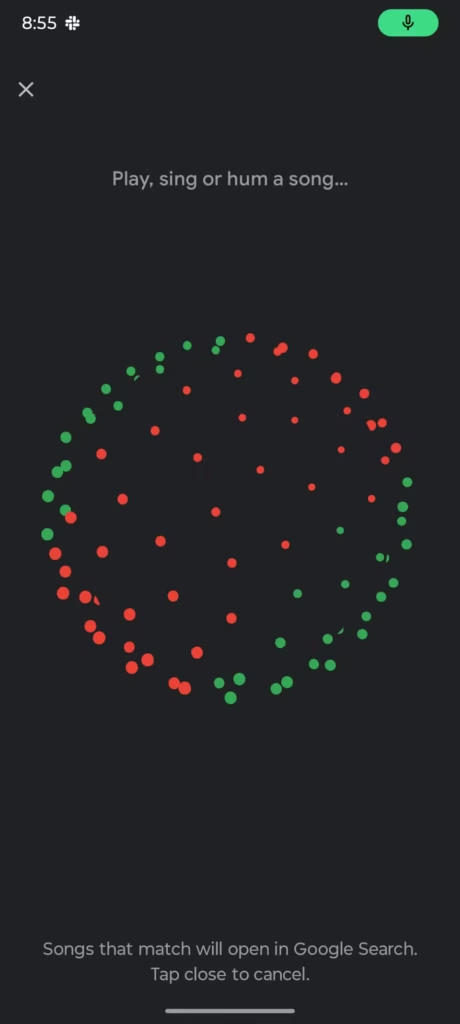

- This will trigger a new, full-screen listening interface, prompting you to begin.

- Simply play, sing, or (more likely) hum the tune that’s stuck in your head.

- Gemini will then process the audio and present you with a list of potential matches from Google Search, complete with confidence percentages to help you pinpoint the correct one.

An Old Feature in a New Home

It’s important to understand that this isn’t a brand-new technology. Google’s machine learning models have been exceptionally good at this for a while. This update is about integration: porting a beloved feature from the core Google app directly into the AI chat experience.

That said, the user experience has changed slightly. The original “hum to search” is available with a single tap on the Google Search widget. In its current form, the Gemini integration requires you to open the app and type out a prompt, which arguably adds friction. It’s a small difference, but one that power users will notice.

The Bigger Picture: Gemini, the Great Consolidator

This move is another clear signal of Google’s long-term strategy. Gemini is slowly but surely absorbing the features and responsibilities of Google Assistant and other standalone Google tools. The goal is to create a single, powerful, conversational interface where you can do everything.

The potential here is huge. Imagine a future where you can hum a tune and follow up with, “Great, now add this to my ‘Road Trip’ playlist on YouTube Music and find tour dates for the artist.” That’s the promise of a consolidated AI.

The challenge for Google is to ensure that, in migrating these features, it doesn’t lose the simple, one-tap convenience that made the original tools so useful in the first place.

Having song identification directly within Gemini is a logical and welcome addition. It makes the AI more capable and context-aware. For users who are already deep in a conversation with Gemini, it will be a seamless way to solve a musical mystery.

However, in its current prompt-based form, it feels less like a revolutionary new feature and more like a “side-grade.” It doesn’t necessarily do the job better or faster than the classic Google Search button; it just does it in a different place.

We’re excited to see this integration evolve. If Google can combine the deep conversational power of Gemini with the effortless convenience of its best-in-class tools, the future of personal assistants will be very bright indeed.